“Trust but verify” is no longer an effective strategy in an information-saturated world. When Ronald Reagan quoted the Russian proverb in 1985, it seemed clever enough; today it sounds hopelessly naive. If we are reasonably diligent executives or citizens, we understand and seek to avoid confirmation bias when important decisions are at hand. What can we do to compensate for the forces working against us?

It’s a cliché that we live in a world of information abundance. That cliché, however, has not led to the changes in information behaviors that it implies. We still operate as though information were a scarce commodity, believing that anyone who holds relevant data is automatically in a position of power and that information seekers depend on the holder’s munificence.

As data becomes abundantly and seemingly easily available, the problem for the information seeker changes. (It changes for the information holder as well, but that is a topic for another time.) The problem transforms from “Can I get the data?” to “What data exists? How quickly can I get it? How do I know I can trust it? How do I evaluate conflicting reports?” It is no longer simply a question of getting an answer but of getting an answer whose strengths and limits you understand and can account for.

The temptation is to fall into a trust trap, abdicating responsibility to someone else. “4 out of 5 dentists recommend…” “According to the Wall Street Journal…” “The pipeline predicts we’ll close $100 million…”

There was a time when we could at least pretend to seek out and rely on trustworthy sources. We counted on the staffs at the New York Times or Wall Street Journal to do fact checking for us. Today we argue that the fact checkers are biased and no one is to be trusted.

Information is never neutral.

Whatever source is collecting, packaging, and disseminating information is doing so with its own interests in mind. Those interests must be factored into any analysis. For example, even data as seemingly impartial as flight schedules must be viewed with a skeptical eye. In recent years, airlines improved their on-time performance as much by adjusting schedules as by any operational changes. You can interpret new flight schedules as an acknowledgment of operational realities or as padding to enable the reporting of better on-time performance.

If we are a bit more sophisticated, we invest time in understanding how those trusted sources gather and process their information, verify that their processes are sound, and accept their reports as reliable inputs. Unfortunately, “trust but verify” is no longer a sufficient strategy. The indicators we once used to assess trust have become too easy to imitate. Our sources of information are too numerous and too distributed to contemplate meaningful verification.

Are we doomed? Is our only response to abandon belief in objective truth and cling to whatever source best caters to our own bias? Fortunately, triangulation is a strategy well matched to the characteristics of today’s information environment.

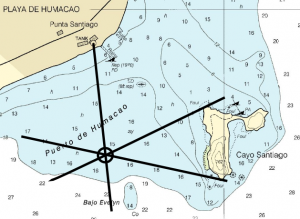

In navigation, you determine your location in relation to known locations. You need at least three locations to fix your current position. Moreover, you need to account for the limits of your measurement tools to understand the precision of your fix. In today’s world of GPS navigation, that precision might well be very high but still imperfect.

In organizational (and other) settings where you are attempting to make sense of—or draw useful inferences from—a multitude of noisy and conflicting sources, the principles of triangulation offer a workable strategy for developing useful insights in a finite and manageable amount of time.

In navigation, the more widely and evenly dispersed your sightings, the more precisely you can fix your position. Focus your data collection on identifying and targeting multiple sources of input that represent divergent, and possibly conflicting, perspectives. Within an organization, for example, work with supporters and opponents, both active and passive, of a proposed reorganization or systems deployment to develop an implementation strategy. When evaluating and selecting a new application, seek out a wider assortment of potential references, vendors, and analysts.

Triangulation also helps counteract the simplistic notion of balance that undermines too many narratives. Triangulation is based on living in a three-dimensional world; it cannot tell you where you are with only two fixes. We should be at least as diligent when mapping more complex phenomena.

A data collection effort organized around seeking multiple perspectives and guidance risks spinning out of control. Analyzing data in parallel with collection manages that risk. Process and integrate data as it is collected. Look for emerging themes and issues and work toward creating a coherent picture of the current state. Such parallel analysis/collection efforts will identify new sources of relevant input and insight to be added to the collection process.

Monitoring the emergence of themes, issues, and insights will signal when to close out the collection process. In the early stages of analysis, the learning curve will be steep, but it will begin to flatten out over time. As the analysis begins to converge and the rate of new information and insight begins to drop sharply, the end of the data collection effort will be near.

The goal of fact finding and research is to make better decisions. You can’t set a course until you know where you are. How carefully you need to fix your position or assess the context for your decision depends on where you hope to go. Thinking in terms of triangulation (how widely you distribute your input base and what level of precision you need) offers a data collection and analysis strategy that is more effective and efficient than the approaches we grew accustomed to in simpler times.

I’ve been writing at a keyboard now for five decades. As it’s Mother’s Day, it is fitting that my mother was the one who encouraged me to learn to type. Early in that process, I was also encouraged to learn to think at the keyboard and skip the handwritten drafts. That was made easier by my inability to read my own handwriting after a few hours.

I’m gearing up to teach project management again over the summer. There’s a notion lurking in the back of my mind that warrants development.

One of my enduring memories from my first days in business school is a video interview of a second-year student offering advice on surviving the case method. While I didn’t fully appreciate it at the time, it was entirely appropriate that his advice was a case study in its own right.

Our admiration for the assembly line is so deep that we are suckers for the promise of “proven systems” regardless of their feasibility. We so treasure predictability and control that the promise seduces no matter how many times it is broken.

There was a time when I wanted to get my hands on the syllabus for the “secret class,” the secret being how to navigate the real world outside the classroom. Think of me as a male version of Hermione Granger: annoyingly book smart and otherwise pretty clueless. Classes were easy; life not so much.

Last time, we looked at the
